Editor's note: This is a guest post from Ken Fromm and Paddy Foran at Iron.io. Iron.io's services are designed for building distributed cloud applications quickly and operating at scale.

Platform as a Service has transformed the use of cloud infrastructure and drastically increased cloud adoption for common types of applications, but apps are becoming more complex. There are more interfaces, greater expectations on response times, increasing connections to other systems, and lots more processing around each event. The next shift in cloud development will be less about building monolithic apps and more about creating highly scalable and adaptive systems.

Don’t get us wrong: developers are not going to go around calling themselves systems engineers any time soon, but at the scale and capabilities that the cloud enables, the title is not too far from the truth.

Platforms as Foundation

It makes sense that platforms are great for framework-suited tasks – receiving requests and responding to them – but as the web evolves, more complex architectures are called for, and these architectures haven’t yet matured to the same degree as all-encompassing, framework-centered applications.

By way of example, apps are rapidly evolving away from a synchronous request/response model towards a more asynchronous/evented model. The reason is that users are demanding faster response times and more immediate data. Also, more actions are being triggered by each event.

Rather than thinking of the request and response as the lifecycle of your application, many developers are thinking of each request loop as just another set of input/output opportunities. Your application is always-on, and by building your architecture to support events and process responses asynchronously and highly concurrently, you can increase throughput and reduce operational complexity and rigidity substantially.

Building N-Tier Applications

The traditional framework-centered application is a two-tier application. You have an application tier, which runs your application’s software, and then you have your database servers, which store your data. At some point, you might start adding background processing that hangs off of your application tier.

Cloud applications, however, are quickly moving away from this traditional two-tier architecture, which grows organically out of need, towards a proper n-tier architecture from the outset. These additional tiers – the specialised components that help an application escape the request/response loop – provide alternative computing, storage, and process orchestration to handle the growing set of response needs.

Independent processing components operating in the background, for example, might make use of these resources to perform specific actions – resizing images, sending emails and notifications, creating a bridge or buffer between processes, or doing any sort of variable-length processing.

Message queues often form the backbone that helps orchestrate these event actions. They have usually been introduced after the first-generation architecture, when an app needs to expand and scale. In brief, they provide asynchronicity, task dispatch, event buffering, and process separation. But they are such basic components – the High Scalability blog likens them to an arch or beam in building design – that they should be built into architectures from the start.

Incorporating additional computing resources into your IT stack ensures you not only have immediate scalability at hand but also a robust mesh of response services that communicate over loose APIs. This allows you to swap out, modify, or upgrade individual processes and computing modules at will – even while your application is running – without affecting other components.

Decoupling Your Application

Once you decide to move away from a monolithic application to an n-tiered one, the process of actually converting the application can be as gradual or as abrupt as you like. Because each piece speaks its own informal API, and doesn’t care about the other pieces, it makes no difference whether you overhaul your application all at once, or slowly transition it piecemeal.

The general rule of thumb for determining whether a piece of functionality should be broken out of the main application is “Is it absolutely necessary that the user wait for this action to be completed before we can give them a response?”

Things like resizing images, scraping web pages, and interacting with APIs take a long, sometimes variable, amount of time, so you shouldn’t make the user wait for them. They’re good candidates for being broken out into their own processing elements.

Once you’ve identified these elements, reduce them to reusable pieces that operate independently of one another. Right from the start you’ll want to loosely connect these “services” using message queues and worker systems. The benefit is that each service is independent of the other processes, so it can change and adapt without adversely impacting other parts of the system. Events can be routed to multiple message queues so that additional actions can easily and transparently be added to specific events. Message queues also create buffers, so differences in processing throughput among the components can be absorbed and each worker class can be scaled out independently.
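To make the routing idea concrete, here is a minimal in-process sketch using only Python’s standard library. It is not how any particular queue service works: the names `publish`, `worker`, `run_demo`, and the `image_uploaded` event are hypothetical, and the in-memory `queue.Queue` objects stand in for a hosted message queue. One event is copied onto two queues, and each queue is drained by its own independent worker.

```python
import queue
import threading


def publish(routes, event_type, payload):
    """Copy the event onto every queue routed to this event type."""
    for q in routes.get(event_type, []):
        q.put(payload)


def worker(q, handler, results):
    """Drain one queue until a None sentinel arrives."""
    while True:
        item = q.get()
        if item is None:
            break
        results.append(handler(item))


def run_demo():
    # In-memory stand-ins for two hosted queues (an assumption for this sketch).
    resize_queue, notify_queue = queue.Queue(), queue.Queue()
    routes = {"image_uploaded": [resize_queue, notify_queue]}

    resized, notified = [], []
    threads = [
        threading.Thread(target=worker,
                         args=(resize_queue, lambda p: "resized " + p, resized)),
        threading.Thread(target=worker,
                         args=(notify_queue, lambda p: "notified " + p, notified)),
    ]
    for t in threads:
        t.start()

    # The publisher knows nothing about which workers exist.
    publish(routes, "image_uploaded", "cat.png")
    publish(routes, "image_uploaded", "dog.png")

    # Tell each worker to stop once its queue is drained.
    for q in (resize_queue, notify_queue):
        q.put(None)
    for t in threads:
        t.join()
    return resized, notified
```

Adding a third action to the event is a one-line change to `routes`; neither the publisher nor the existing workers need to be touched.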

Breaking things down into components will depend on the specific functionality, but there are a few tools you can use:

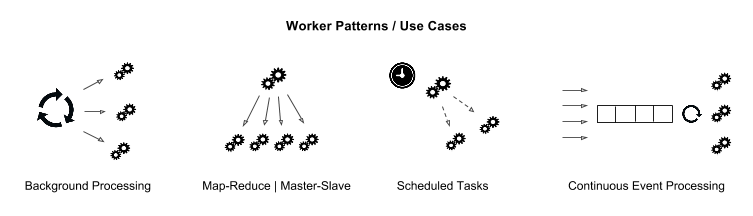

Workers

Workers provide the muscle in these distributed systems; they are the processing power that actually gets things done. Workers generally provide for custom-built environments that only survive for the duration of the task – whatever work needs to be done, like resizing a single picture. Then the environment is destroyed, and rebuilt for the next task.

The benefit of workers is their flexibility: because they’re highly reproducible and self-contained, workers can be scaled effortlessly to meet demand. The weakness of workers that needs to be architected around is their startup cost: because each task requires a new environment to be built, it can be expensive to set up complex environments. To work around this, we recommend batching tasks into groups that can be performed in the same environment.
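As a rough illustration of batching, the sketch below groups tasks into fixed-size chunks and pays the environment setup cost once per chunk rather than once per task. The helper names (`batches`, `run_batch`, `demo`) and the toy `setup`/`handler` functions are assumptions made for the example, not part of any worker service’s API.

```python
from itertools import islice


def batches(tasks, size):
    """Yield successive lists of at most `size` tasks."""
    it = iter(tasks)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def run_batch(chunk, setup, handler):
    """Build the environment once, then reuse it for every task in the chunk."""
    env = setup()
    return [handler(env, task) for task in chunk]


def demo():
    setups = []

    def setup():
        # Stand-in for an expensive environment build.
        setups.append(1)
        return {"scale": 2}

    def handler(env, task):
        return task * env["scale"]

    results = []
    for chunk in batches(range(5), 2):
        results.extend(run_batch(chunk, setup, handler))
    return results, len(setups)
```

Here five tasks in batches of two trigger three environment builds instead of five; the right batch size depends on how expensive setup is relative to each task.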

The other thing customers usually need to wrap their heads around is that tasks don’t get executed immediately – they’re things that will happen at some point in the future. While there are some user-visible actions that it’s okay to offload to workers – forking GitHub repos, for example, happens in a worker – generally you want to keep actions that the user specifically requests in the request/response loop.

→ IronWorker, Heroku Worker Dynos, Celery, and Sidekiq are good options to look at for building workers into your architecture.

Message Queues

Message queues are the bonds that tie distributed architectures together. They’re used to transmit data between the component parts of the architecture. The general concept is that chunks of data (messages) are put into a group (a queue) then removed from that group in a predefined order, either FIFO (first in first out) or FILO (first in last out). Message queues generally come in two flavours: pull queues and push queues.

Pull queues are queues that ask clients to periodically check for messages on a queue. If a message is found, the queue will give it to the client and remove it from the queue. If a message is not found, the client just tries again after a set period of time. Pull queues are great for data flows that are heavy or consistent: there’s a high chance that each request will yield a message, so little bandwidth and processing power is wasted, and the queue won’t bring down the client by delivering more messages than the client can handle.

Push queues are queues that inform subscribers when a message is added to the queue. This means that data is processed more promptly, because subscribers know about it immediately, but it also means that the queue and client have to do more work, sending and receiving the data. Push queues are great for data flows that are sporadic, because the data comes infrequently. Knowing about the data as soon as it comes is worth the tradeoff of a small amount of extra processing.
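A pull consumer can be sketched as a simple polling loop. This is an illustration under assumptions: the standard library’s `queue.Queue` stands in for a remote queue, and the `poll` function with its `interval` and `max_empty_polls` parameters is hypothetical (a real consumer would usually poll indefinitely rather than give up).

```python
import queue
import time


def poll(q, handle, interval=0.01, max_empty_polls=3):
    """Pull messages one at a time; wait and retry when the queue is empty."""
    handled = []
    empty_polls = 0
    while empty_polls < max_empty_polls:
        try:
            msg = q.get_nowait()  # fetching also removes the message
        except queue.Empty:
            empty_polls += 1
            time.sleep(interval)  # nothing there, try again later
            continue
        empty_polls = 0
        handled.append(handle(msg))
    return handled
```

Because the client decides when to ask for the next message, it never receives more work than it can process.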
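The push model can be sketched as a queue that calls its subscribers the moment a message arrives. The `PushQueue` class below is a hypothetical in-process stand-in: hosted push queues typically deliver to subscriber endpoints over the network, whereas this example uses plain Python callbacks.

```python
class PushQueue:
    """Toy push queue: every subscriber is notified on each publish."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, callback):
        """Register a callable that receives each new message."""
        self._subscribers.append(callback)

    def publish(self, message):
        """Deliver the message to all subscribers immediately."""
        for callback in self._subscribers:
            callback(message)


received = []
alerts = PushQueue()
alerts.subscribe(received.append)
alerts.subscribe(lambda m: received.append(m.upper()))
alerts.publish("disk full")
```

There is no polling loop here at all, which is the point: the cost moves to the queue, which must track subscribers and do the delivery work for each message.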

→ IronMQ, Beanstalkd, and CloudAMQP are good options for message queues that will connect your architectures.

For more information on uses of a message queue, check out this post on Top 10 Uses of a Message Queue.

Key-Value Datastores

Key-value datastores are the simple memory and counters behind distributed systems. They’re used to store and index the data that workers create or need. The difference between a key-value datastore and a queue is that queue data is tied to a specific task – it’s meant to be processed once, then forgotten.

Key-value data typically lives outside of the task lifecycle – it can be shared between many tasks and survive after processing. This is useful for storing results or communicating shared information that is not task-specific. The benefit of caches is that they provide an easy, predictable interface for accessing and storing data. The drawback is that not all caches are concurrency-safe, so it’s possible to corrupt data if two or more clients are trying to write to the same key at the same time. This can be architected around by generating IDs that will only be used by a single client, but it’s something to keep in mind.
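One way to picture the "one key per client" workaround: each worker writes only to a key that embeds its own ID, and a later step combines the per-worker values. In this sketch a plain `dict` stands in for the key-value store, and the `wordcount:<id>` key scheme and function names are assumptions made for illustration.

```python
import threading


def count_words(store, worker_id, lines):
    """Each worker owns exactly one key, so no two clients write the same key."""
    store[f"wordcount:{worker_id}"] = sum(len(line.split()) for line in lines)


def total(store, worker_ids):
    """Combine the per-worker results after the workers have finished."""
    return sum(store[f"wordcount:{w}"] for w in worker_ids)


def demo():
    store = {}  # stand-in for a shared key-value datastore
    work = {"w1": ["a b", "c"], "w2": ["d e f"]}
    threads = [
        threading.Thread(target=count_words, args=(store, wid, lines))
        for wid, lines in work.items()
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return store, total(store, work.keys())
```

The alternative, having every worker read, increment, and rewrite a single shared counter key, is exactly the pattern that can lose updates on a store without atomic operations.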

→ IronCache, Memcached, and Redis are good options for datastores that will keep your data handy and safe while you process it or share data between tasks.

The Right Tools | The Right Services

The many cloud applications that have integrated with Heroku as add-ons are indispensable tools in this kind of architecture. These single-minded components require no standup or provisioning, and they also require no maintenance from the developer. They just work.

Besides the peace of mind this buys – which, in its own right, is worth it – this specialisation allows the services to be more focused and feature-rich than if they were all implemented by the developer. Orthogonal, flexible components are hard to make, and diluting a developer’s attention with the plumbing will detract from their application – the thing they actually care about making.

At Iron.io, we’re big fans of using elastic cloud services as components. Our mindset from the beginning as early cloud developers has been to use service components wherever possible, so as to focus on our core competencies. It’s probably not surprising that we ended up making cloud services for other developers to use.

Start breaking your monolithic apps into their component pieces today. You’ll be glad you did. And if you need help, read more about loosely coupled architectures and strategies on the Iron.io blog. Our engineering team is always happy to talk about scalable architectures.